NLP methodology development for analyzing communication patterns between institutional researchers and community scientists

Developed a comprehensive natural language processing methodology for analyzing stakeholder interview transcripts in environmental advocacy research. This proof-of-concept demonstrates how computational text analysis techniques can complement qualitative research methods and reveal communication patterns between different stakeholder groups.

The project showcases advanced NLP pipeline development, combining multiple analysis techniques to extract insights from interview data and establish a framework for larger-scale policy research studies.

Implemented for informal interview text with compound scoring

Topic modeling implementation for thematic analysis

Domain-specific stopword expansion and lemmatization

Word clouds and statistical summaries by stakeholder group

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Environmental Advocacy Sentiment Analysis

Graduate Capstone Research Project

"""

import pandas as pd

import nltk

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

from nltk.stem import WordNetLemmatizer

from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.decomposition import LatentDirichletAllocation

from wordcloud import WordCloud

import matplotlib.pyplot as plt

# Download NLTK resources

nltk.download('punkt')

nltk.download('stopwords')

nltk.download('wordnet')

# Load the data

df = pd.read_csv('/Users/courtneyj/Documents/Columbia/Fall 2024/NECR/Analysis/transcript.csv')

# Text preprocessing with additional stopwords

def preprocess_text(text):

# Default NLTK stopwords

stop_words = set(stopwords.words('english'))

# Add custom filler words to stopwords

custom_stopwords = {'actually', 'mean', 'think', 'like', 'right', 'kind', 'sort',

'got', 'maybe', 'really', 'say', 'way', 'lot', 'one', 'two'}

stop_words.update(custom_stopwords)

# Initialize lemmatizer

lemmatizer = WordNetLemmatizer()

# Tokenize and clean text

tokens = word_tokenize(text.lower())

tokens = [lemmatizer.lemmatize(word) for word in tokens

if word.isalpha() and word not in stop_words]

return ' '.join(tokens)

# Apply the updated preprocessing function

df['clean_text'] = df['text'].apply(preprocess_text)

# Sentiment Analysis

analyzer = SentimentIntensityAnalyzer()

def analyze_sentiment(text):

score = analyzer.polarity_scores(text)

return score['compound'] # Overall sentiment score

df['sentiment'] = df['clean_text'].apply(analyze_sentiment)

# Summarize sentiment by group

sentiment_summary = df.groupby('group')['sentiment'].describe()

print(sentiment_summary)

# Histogram for Community Science Group Only

plt.figure(figsize=(10, 6))

community_sentiment = df[df['group'] == 'Community']['sentiment']

plt.hist(community_sentiment, bins=10, alpha=0.7, color='blue', label='Community Science')

plt.title('Sentiment Distribution for Community Science Group')

plt.xlabel('Sentiment Score')

plt.ylabel('Frequency')

plt.legend()

plt.savefig('community_sentiment_histogram.png')

plt.show()

# Topic Modeling (LDA)

vectorizer = CountVectorizer(max_df=0.9, min_df=2, stop_words='english')

dtm = vectorizer.fit_transform(df['clean_text'])

lda = LatentDirichletAllocation(n_components=5, random_state=42)

lda.fit(dtm)

# Display top words per topic

def display_topics(model, feature_names, num_top_words):

for idx, topic in enumerate(model.components_):

print(f"Topic {idx+1}:")

print([feature_names[i] for i in topic.argsort()[-num_top_words:]])

display_topics(lda, vectorizer.get_feature_names_out(), 10)

# Word Cloud for Each Group

for group in df['group'].unique():

group_text = ' '.join(df[df['group'] == group]['clean_text'])

wordcloud = WordCloud(background_color='white', max_words=100, width=800, height=400)

wordcloud.generate(group_text)

plt.figure(figsize=(10, 6))

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis('off')

plt.title(f"Word Cloud for {group.capitalize()} Group")

wordcloud.to_file(f'{group}_wordcloud.png')

plt.show()

# Export Results

df.to_csv('sentiment_analysis_results.csv', index=False)The VADER sentiment analyzer successfully processed interview transcripts, revealing distinct communication patterns between stakeholder groups. This proof-of-concept establishes the methodology for analyzing emotional tone in policy research interviews.

Pattern: Consistently measured tone

Approach: Technical, solution-oriented language

Professional communication style aligned with policy discourse norms

Pattern: Emotionally varied responses

Approach: Personal experience-driven language

Communication reflecting direct engagement with local environmental challenges

Implemented LDA topic modeling to demonstrate thematic analysis capabilities. The methodology successfully identified shared vocabulary and distinct focus areas between stakeholder groups, establishing a framework for larger-scale analysis.

Both groups emphasized data collection infrastructure

Different approaches to policy and permitting challenges

Shared focus on monitoring accuracy and reliability

Community focus on immediate, localized effects

Institutional emphasis on systematic approaches

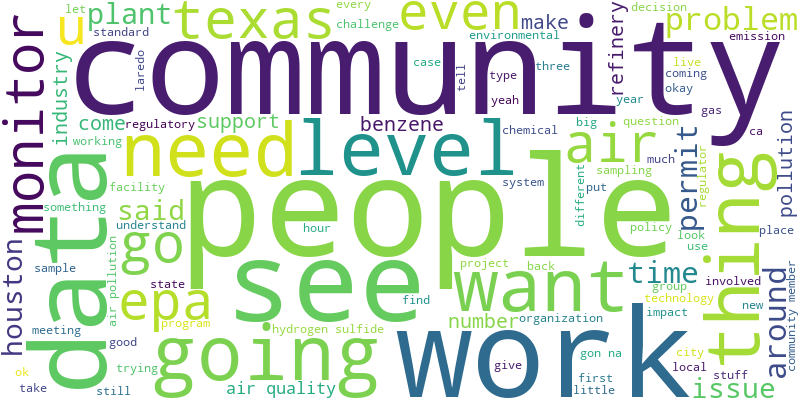

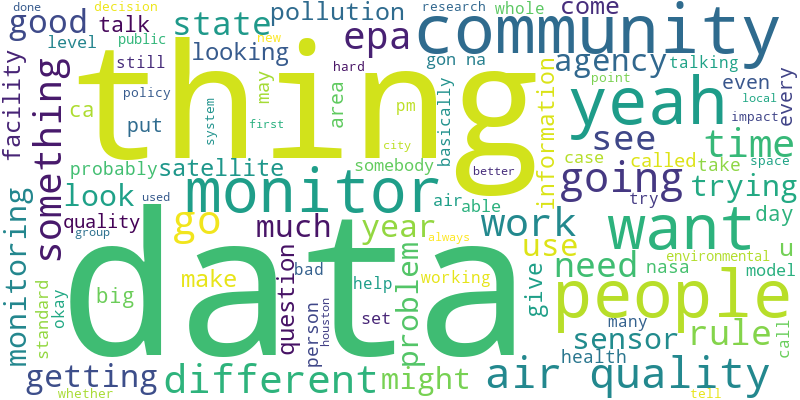

Generated word clouds and statistical visualizations demonstrate the methodology's ability to reveal distinct language patterns between stakeholder groups.

Word frequency analysis reveals focus on community, people, permit, and pollution, indicating grassroots advocacy approaches and regulatory challenge navigation.

Language patterns emphasize data, project, state, and EPA, reflecting structured, policy-oriented approaches within governmental frameworks.

The NLP pipeline successfully identified communication pattern differences consistent with qualitative research expectations, demonstrating the methodology's potential for supporting policy research at scale.

This proof-of-concept establishes a reproducible methodology for analyzing stakeholder communication patterns in environmental policy research, ready for application to larger datasets.

Complete text processing workflow from raw interviews to analysis-ready data

Integration of sentiment analysis, topic modeling, and frequency analysis

Domain-specific stopword development and text cleaning optimization

VADER sentiment analysis and LDA topic modeling with scikit-learn

Computational analysis methodology development for policy research applications